
In the fourth chapter of my Deep Learning Foundations series, I'm thrilled to introduce the concept of hidden layers. These layers are the backbone of a neural network's ability to learn complex patterns. By weaving a hidden layer into our network, I'll illuminate the computations that power these sophisticated architectures and show how they enhance the network's predictive prowess. Let's dive into the architectural nuances that make neural networks such potent learning machines. Building on the foundational knowledge from previous posts, I aim to enrich your understanding of neural network design and functionality.

Deep Dive into Hidden Layers

The exploration of hidden layers marks a significant chapter in understanding neural networks. Unlike the visible input and output layers, hidden layers work behind the scenes to transform data in complex ways, enabling networks to capture and model intricate patterns. Let's unpack the concept and computational dynamics of hidden layers, and how they empower neural networks to solve advanced problems.

- Unveiling Hidden Layers: At their core, hidden layers are what differentiate a superficial model from one capable of deep learning. These layers allow the network to learn features at various levels of abstraction, making them indispensable for complex problem-solving. By introducing hidden layers, we significantly boost the network’s capability to interpret data beyond what is immediately observable, facilitating a gradual, layer-by-layer transformation towards the desired output.

- Navigating Through Computations: The journey from input through hidden layers to output involves a series of calculated steps. Each neuron in a hidden layer computes a weighted sum of its inputs, adds a bias, and then passes this value through an activation function like ReLU. This process introduces non-linearity, which lets the network model relationships between inputs and outputs that a purely linear model cannot. Our focus will be on understanding how data is transformed as it propagates through these layers, and how activation functions play a pivotal role in shaping the network's learning behavior.

- Exploring the Weights and Nodes Dynamics: The relationship between weights and nodes is fundamental to how information is processed and learned within the network. Weights determine the strength of the connection between nodes in different layers, influencing how much of the signal is passed through. Adjusting these weights is how neural networks learn from data over time. In this section, we’ll delve into the mechanics of weight adjustment and its impact on the network’s accuracy and learning efficiency.
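To make the role of non-linearity concrete, here is a minimal NumPy sketch. The weights are hypothetical, hand-picked for illustration, and are not taken from the original exercise. Without an activation function, the two stacked layers collapse into a single linear layer (here, one that always outputs 0); with ReLU on the hidden layer, the same weights compute |x1 - x2|, which no single linear layer can represent.

```python
import numpy as np

# Hypothetical hand-picked weights: 2 inputs -> 2 hidden nodes -> 1 output.
W1 = np.array([[1.0, -1.0],
               [-1.0, 1.0]])
b1 = np.zeros(2)
W2 = np.array([[1.0, 1.0]])
b2 = np.zeros(1)

def relu(z):
    # Replace negative entries with zero; keep positive entries unchanged.
    return np.maximum(z, 0)

def without_activation(x):
    # Two stacked linear layers with nothing in between.
    return W2 @ (W1 @ x + b1) + b2

def with_relu(x):
    # Same weights, but the hidden layer applies ReLU.
    return W2 @ relu(W1 @ x + b1) + b2

# Without an activation, the two layers are equivalent to ONE linear layer.
W_single = W2 @ W1          # here this is all zeros
b_single = W2 @ b1 + b2

for x in [np.array([3.0, 1.0]), np.array([1.0, 3.0]), np.array([2.0, 2.0])]:
    # without_activation(x) always matches the single layer;
    # with_relu(x) equals |x[0] - x[1]| for these weights.
    print(x, without_activation(x), W_single @ x + b_single, with_relu(x))
```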

For those eager to see these concepts in action, check out the interactive code example, which offers a hands-on experience with the mechanics of embedding a hidden layer within a neural network. Through this practical demonstration, you'll gain insight into the nuances of network operation and the transformative power of hidden layers.

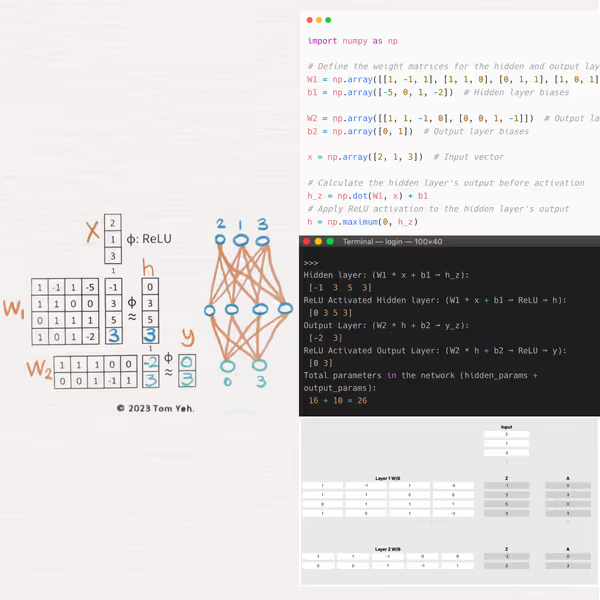

Walking Through the Code

In this segment, I’m excited to dissect a Python script that simulates a neural network with a hidden layer, emphasizing the function and impact of ReLU activation. This walkthrough is designed to shed light on the critical elements that allow a neural network to process and learn from data effectively.

Initiating with NumPy:

- Our journey starts with importing `numpy as np`, a fundamental library for numerical computation in Python. This tool is crucial for managing array operations efficiently, a common task in deep learning algorithms.
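As a small illustration of why NumPy matters here, using made-up values: each node's weighted sum is one row of a matrix-vector product, so a single `W @ x` call (equivalent to `np.dot(W, x)` for a 2-D array and a 1-D vector) replaces an explicit Python loop over the nodes.

```python
import numpy as np

# Hypothetical layer: 3 inputs feeding 2 nodes (values are illustrative).
W = np.array([[1.0, 0.0, -1.0],
              [2.0, 1.0, 0.0]])
x = np.array([3.0, 4.0, 5.0])

# Looping: each node (row of W) computes its own weighted sum of the inputs.
loop_result = np.array([sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W])

# NumPy: one matrix-vector product computes every node's weighted sum at once.
vectorized_result = W @ x

print(loop_result, vectorized_result)
```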

Activating with ReLU:

- I define the `relu(x)` function to apply the ReLU (Rectified Linear Unit) activation, a simple but profound mechanism that introduces non-linearity by converting negative values to zero while passing positive values through unchanged. This step is essential for enabling the network to capture complex patterns.
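A minimal sketch of such a function, assuming it operates element-wise on NumPy arrays; the original script's exact definition may differ:

```python
import numpy as np

def relu(x):
    """Rectified Linear Unit: element-wise max(0, x)."""
    return np.maximum(0, x)

z = np.array([-3.0, -0.5, 0.0, 2.0, 7.0])
# Negative entries become 0; 0, 2 and 7 pass through unchanged.
print(relu(z))
```

`np.maximum` compares element by element and broadcasts the scalar `0`, so the same function works on a single number, a vector, or a matrix.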

Constructing the Two-Layer Network:

- At the core of this exploration, the `two_layer_network` function orchestrates the interaction between the input, the hidden layer, and the output. This function illustrates how data flows through the network, undergoing transformation and activation.
  - First Layer Transformation: The script begins by transforming the input vector `x` using the first layer's weights `W1` and biases `b1`. The dot product of `W1` and `x`, plus `b1`, yields the pre-activation values for the hidden layer, which are then passed through the ReLU function to produce the hidden layer's activations.
  - Second Layer Transformation: The activated hidden layer output `h` is subsequently processed by the second layer's weights `W2` and biases `b2`. As with the first layer, we calculate the dot product of `W2` and `h`, plus `b2`, followed by ReLU activation to obtain the final output `y`.
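The forward pass described in these two steps might look like the following sketch. The parameter order and the choice to also return the pre-activation values `z1` and `z2` (so they can be inspected before ReLU) are assumptions, not necessarily how the original script is organized.

```python
import numpy as np

def relu(x):
    return np.maximum(0, x)

def two_layer_network(x, W1, b1, W2, b2):
    """Forward pass through one hidden layer and one output layer.

    Returns pre- and post-activation values for both layers
    (returning the intermediates is an assumption made for inspection).
    """
    z1 = np.dot(W1, x) + b1   # hidden layer: weighted sums plus biases
    h = relu(z1)              # hidden layer activation
    z2 = np.dot(W2, h) + b2   # output layer: weighted sums plus biases
    y = relu(z2)              # final output, also passed through ReLU
    return z1, h, z2, y

# Usage with hypothetical shapes: 3 inputs, 4 hidden nodes, 2 outputs.
x = np.ones(3)
W1, b1 = np.ones((4, 3)), np.zeros(4)
W2, b2 = np.ones((2, 4)), np.zeros(2)
z1, h, z2, y = two_layer_network(x, W1, b1, W2, b2)
print(h.shape, y.shape)  # (4,) (2,)
```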

Examining Network Parameters:

- An integral part of understanding a neural network's complexity and capacity is analyzing its parameters. The script calculates the total number of parameters by counting the weights and biases across both layers.
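As a sketch of that count, assuming hypothetical layer sizes of 3 inputs, 4 hidden nodes, and 2 outputs: every entry of each weight matrix and bias vector is one parameter, so the total can be read off the array sizes or computed directly from the layer widths.

```python
import numpy as np

# Hypothetical layer sizes: 3 inputs -> 4 hidden nodes -> 2 outputs.
n_in, n_hidden, n_out = 3, 4, 2

W1 = np.zeros((n_hidden, n_in))   # one weight per (hidden node, input) pair
b1 = np.zeros(n_hidden)           # one bias per hidden node
W2 = np.zeros((n_out, n_hidden))  # one weight per (output node, hidden node) pair
b2 = np.zeros(n_out)              # one bias per output node

# Total parameters = every entry of every weight matrix and bias vector.
total = sum(p.size for p in (W1, b1, W2, b2))

# Same count from the layer widths: (inputs + 1 bias) * nodes, per layer.
formula = (n_in + 1) * n_hidden + (n_hidden + 1) * n_out
print(total, formula)  # 26 26
```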

Executing and Interpreting Results:

- With the network fully defined, executing the `two_layer_network` function simulates the processing of input through the hidden layer to the output layer. The outcomes, both before and after ReLU activation, are printed, offering insight into the network's operational dynamics.
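Here is what that printout might look like for one pass, with small hypothetical weights chosen so that ReLU visibly zeroes a value in both layers. These numbers are illustrative, not the ones used in the original exercise.

```python
import numpy as np

# Hypothetical parameters: 3 inputs -> 2 hidden nodes -> 2 outputs.
x = np.array([2.0, 1.0, 3.0])
W1 = np.array([[1.0, -1.0, 0.0],
               [0.0, 1.0, -1.0]])
b1 = np.array([0.0, 1.0])
W2 = np.array([[1.0, 1.0],
               [-1.0, 2.0]])
b2 = np.array([1.0, 0.0])

z1 = W1 @ x + b1        # hidden layer, before ReLU
h = np.maximum(0, z1)   # hidden layer, after ReLU
z2 = W2 @ h + b2        # output layer, before ReLU
y = np.maximum(0, z2)   # output layer, after ReLU

print("hidden, before ReLU:", z1)
print("hidden, after ReLU: ", h)
print("output, before ReLU:", z2)
print("output, after ReLU: ", y)
```

With these values the hidden pre-activations are [1, -1], so ReLU gives h = [1, 0]; the output pre-activations are [2, -1], and ReLU gives y = [2, 0].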

Visualizing the Process:

- By meticulously detailing each computational step and visualizing the data flow, this walkthrough aims to clarify how hidden layers contribute to a neural network's ability to learn. It highlights the transformational journey of input data as it moves through the network, emerging as a learned output.
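One simple way to visualize the data flow in text is to record the values at every stage of the forward pass. The helper `trace_forward` and the weights below are hypothetical, written only for this illustration; ReLU is applied after each layer, matching the network described above.

```python
import numpy as np

def relu(x):
    return np.maximum(0, x)

def trace_forward(x, layers):
    """Run a forward pass and record (stage name, values) at every step.

    layers: list of (W, b) pairs; ReLU follows every layer.
    """
    trace = [("input", x)]
    a = x
    for i, (W, b) in enumerate(layers, start=1):
        z = W @ a + b
        trace.append((f"layer {i} pre-ReLU", z))
        a = relu(z)
        trace.append((f"layer {i} post-ReLU", a))
    return trace

# Hypothetical parameters: 2 inputs -> 3 hidden nodes -> 1 output.
layers = [
    (np.array([[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]), np.array([0.0, -1.0, 0.0])),
    (np.array([[1.0, 1.0, 1.0]]), np.array([0.0])),
]
for name, values in trace_forward(np.array([1.0, 2.0]), layers):
    print(f"{name:>18}: shape={values.shape}, values={values}")
```

For the input [1, 2], the hidden pre-activations are [1, 1, -1], ReLU turns them into [1, 1, 0], and the output is [2].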

Theoretical Insights and Practical Application

This detailed code walkthrough bridges the gap between theoretical neural network concepts and their practical implementation. By dissecting the network layer by layer and focusing on the ReLU activation function, I hope you've gained a deeper appreciation for the intricacies of neural computations. It's a step toward demystifying the complex operations that enable neural networks to perform tasks ranging from simple classifications to understanding the nuances of human language and beyond.


Original Inspiration

This journey was sparked by the insightful exercises created by Dr. Tom Yeh for his graduate courses at the University of Colorado Boulder. He’s a big advocate for hands-on learning, and so am I. After realizing the scarcity of practical exercises in deep learning, he took it upon himself to develop a set that covers everything from basic concepts like the one discussed today to more advanced topics. His dedication to hands-on learning has been a huge inspiration to me.

Conclusion

With this exploration of hidden layers complete, it's clear that these intermediate layers are more than just stepping stones between input and output: they are what enables neural networks to tackle complex problems. The journey through understanding hidden layers, from their conceptual foundation to their practical application, reveals the versatility and power of neural networks. Through the lens of ReLU activation and the detailed walkthrough of a two-layer network, we've seen firsthand how simple building blocks (weighted sums, biases, and activations) combine into systems capable of learning and adapting. Hidden layers stand as a testament to the progress and potential of neural network design, offering a glimpse into the future possibilities of AI. As we continue to push the boundaries of what these models can achieve, the insights gained from understanding hidden layers will serve as a cornerstone for future innovations. In the next installment of my Deep Learning Foundations series, I'll venture further into the architecture of neural networks, exploring advanced concepts that build upon the foundations laid by hidden layers. Stay tuned for this fascinating journey into the heart of neural computation, unlocking new levels of understanding and capability along the way.

- Last Post: Unraveling Four-Neuron Networks

- Next Post: Batch Processing Three Inputs

LinkedIn Post: Coding by Hand: Understanding Hidden Layers

About Jeremy London

I'm based in Denver and fill my days with creativity and curiosity. When I'm not snowboarding fresh powder, I'm in the kitchen chasing the perfect recipe or playing my guitar. I love to tinker, experimenting with flavors, sounds, and ideas, always looking for ways to turn the ordinary into something a little more extraordinary.