Scroll down for Interactive Code Environment 👇

In this third installment of my Deep Learning Foundations series, I turn to a more complex and captivating topic: the four-neuron network. Having previously unraveled the basics of a single neuron’s function, it’s now time to venture deeper into the cooperative world of multiple neurons. Moving from single-neuron analysis to a four-neuron network is a pivotal step in the journey to decode the complexities of neural networks.

Diving Deeper: The Four-Neuron Network Unveiled

The spotlight now turns to a sophisticated subject matter: the architecture and interplay within a four-neuron layer. Building upon the foundational knowledge from our exploration of the single-neuron model, I’m set to broaden our scope and delve into the synergy of multiple neurons within a network. This exploration will underscore the essential roles played by matrix operations and ReLU activation in orchestrating neural network behaviors.

Far from merely adding complexity, the four-neuron network is a gateway to understanding how neurons work together. By dissecting the contribution of each neuron to the layer’s collective output, I intend to demystify neural computations and shed light on the engineering that lets neural networks tackle highly complex tasks. This understanding is indispensable for anyone aiming to get the most out of neural networks, because it provides the insight needed to design more intricate and efficient models.

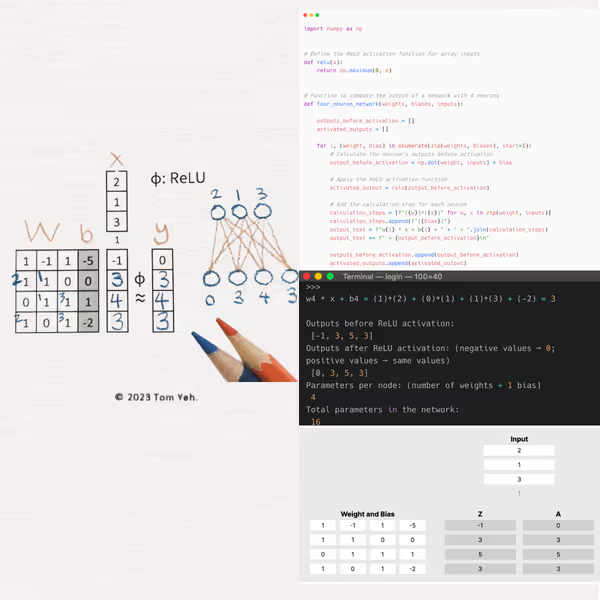

Walking Through the Code

In this section, I’ll walk you through a Python script that implements a four-neuron network layer. The code shows how the layer processes its inputs collectively and how moving from one neuron to four extends what the network can compute.

- Leveraging NumPy for Complex Operations:
  - I begin with NumPy, an essential library for performing matrix operations efficiently. These operations are the cornerstone of neural network computations, and they become especially important once a layer contains several neurons.

- Understanding Matrix Multiplication:
  - The core of our four-neuron layer’s computation lies in matrix multiplication. I’ll show you how multiplying the weight matrix by the input vector, and then adding the bias vector, yields the pre-activation outputs. Each row of the weight matrix, together with its bias, holds the parameters of one neuron, and these parameters form the basis for the layer’s collective output.

- Integrating Non-Linearity with ReLU:
  - After obtaining the linear outputs, we introduce non-linearity by applying the ReLU activation function to the entire output vector. ReLU keeps positive values unchanged and sets negative values to zero. This crucial step allows our network to capture complex patterns and data relationships that a purely linear layer cannot represent.

- Visualizing the Interactions:
  - To demystify the process, I’ll dissect these operations, aiming to clarify how each neuron in the layer processes the same inputs with its own weights and bias and contributes one element to the layer’s output.

- Running the Simulation:
  - Finally, I execute the model to observe the output of the four-neuron layer after applying ReLU activation. This practical demonstration cements my understanding of the theoretical principles underpinning neural network functionality.
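The steps above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the script from the interactive environment: the input, weight, and bias values are hypothetical, chosen only so the arithmetic is easy to check by hand, and the assumption of three input features is mine.

```python
import numpy as np

# Hypothetical input vector with three features (values chosen for illustration).
x = np.array([2.0, 1.0, 3.0])

# Hypothetical weight matrix: one row per neuron, one column per input feature.
W = np.array([
    [ 1.0, -1.0,  1.0],   # neuron 1
    [ 1.0,  1.0,  0.0],   # neuron 2
    [ 0.0,  1.0, -1.0],   # neuron 3
    [-1.0,  0.0,  1.0],   # neuron 4
])

# Hypothetical bias vector: one bias per neuron.
b = np.array([-5.0, 0.0, 1.0, 2.0])

# Pre-activation outputs: matrix-vector product plus bias.
z = W @ x + b             # z is [-1, 3, -1, 3]

# ReLU: keep positive values, replace negative values with zero.
a = np.maximum(z, 0)      # a is [0, 3, 0, 3]

print("pre-activation z:", z)
print("after ReLU a:   ", a)
```

With these made-up numbers, neurons 1 and 3 produce negative pre-activations and are zeroed by ReLU, while neurons 2 and 4 pass their values through unchanged.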

Interactive Code Environment

This code calculates the output of each neuron in the four-neuron layer before and after ReLU activation. It first takes the dot product of each neuron’s weights with the inputs and adds the neuron’s bias to produce the pre-activation matrix Z, and then applies ReLU to derive the final output matrix A. This helps showcase the interplay of weights, biases, and inputs through mathematical operations.
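One way to arrive at Z and A as matrices is to fold the biases into the weight matrix as an extra column and append a row of ones to the inputs, so that a single matrix product handles weights and biases together. The sketch below assumes that layout and a batch of two input vectors stored as columns; both choices, and all the numbers, are illustrative assumptions rather than the contents of the interactive environment.

```python
import numpy as np

# Hypothetical parameters: 4 neurons, 3 input features.
W = np.array([
    [ 1.0, -1.0,  1.0],
    [ 1.0,  1.0,  0.0],
    [ 0.0,  1.0, -1.0],
    [-1.0,  0.0,  1.0],
])
b = np.array([[-5.0], [0.0], [1.0], [2.0]])   # column vector, shape (4, 1)

# Fold the biases into the weight matrix as a final column: shape (4, 4).
Wb = np.hstack([W, b])

# Two hypothetical input vectors, stored as the columns of X: shape (3, 2).
X = np.array([
    [2.0, 1.0],
    [1.0, 0.0],
    [3.0, 2.0],
])

# Append a row of ones so each bias is multiplied by 1: shape (4, 2).
X_aug = np.vstack([X, np.ones((1, X.shape[1]))])

# Pre-activation matrix Z: one row per neuron, one column per input vector.
Z = Wb @ X_aug

# Output matrix A: ReLU applied element-wise.
A = np.maximum(Z, 0)

print("Z =\n", Z)
print("A =\n", A)
```

The result is the same as computing `W @ X + b` directly; the augmented form simply keeps every parameter of the layer in one matrix.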

Original Inspiration

This journey was sparked by the insightful exercises created by Dr. Tom Yeh for his graduate courses at the University of Colorado Boulder. He’s a big advocate for hands-on learning, and so am I. After realizing the scarcity of practical exercises in deep learning, he took it upon himself to develop a set that covers everything from basic concepts like the one discussed today to more advanced topics. His dedication to hands-on learning has been a huge inspiration to me.

Conclusion

Exploring a four-neuron network layer has broadened our understanding of neural networks, emphasizing the critical role of matrix operations and ReLU activation in modeling complex data relationships. As we progress, we’ll delve deeper into network architectures, unraveling the mysteries of deep learning layer by layer. Plus, I’ll include a link to a LinkedIn post where we can dive into discussions and share thoughts.

- Last Post: Understanding Single Neuron Networks

- Next Post: Understanding Hidden Layers

LinkedIn Post: Coding by Hand: Four Neuron Networks in Python

About Jeremy London

I'm based in Denver and fill my days with creativity and curiosity. When I'm not snowboarding fresh powder, I'm in the kitchen chasing the perfect recipe or playing my guitar. I love to tinker, experimenting with flavors, sounds, and ideas, always looking for ways to turn the ordinary into something a little more extraordinary.