Scroll down for Interactive Code Environment 👇

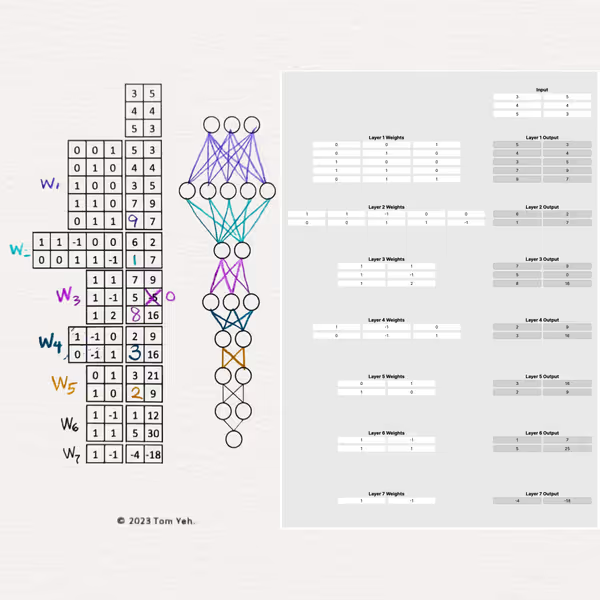

Continuing the Deep Learning Foundations Series, I wanted to deepen my understanding of the inner workings of Multi-Layer Perceptrons (MLPs), building on what I had learned about neural network architectures by constructing a seven-layer network! Let’s go through each layer of this mechanism and explore the interactive example demonstrating the MLP’s design and functionality.

What is a Multi-Layer Perceptron?

- Understanding MLP Structure: At its core, an MLP consists of multiple layers of nodes, each layer fully connected to the next. This structure allows for the modeling of complex, non-linear relationships between inputs and outputs.

- Simplifying Assumptions for Clarity: To ease this journey, I adopt two simplifications: zero biases across all nodes, and direct application of the ReLU activation function in every layer except the output layer. These assumptions help me focus on the network’s depth and its computational process without distractions from additional complexities.

- Layered Computations: The example network, with seven layers, serves as a playground for computational practice. Now I can explore how inputs transition through the network, transforming via weights and activations, to produce outputs across each layer.
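To make the two simplifications concrete, here is a minimal sketch of a single hidden layer. The weight matrix `W` and input `x` are made-up values for illustration, not the ones from the original exercise.

```python
import numpy as np

def relu(z):
    """Element-wise ReLU: negative values become 0, the rest pass through."""
    return np.maximum(z, 0)

# Hypothetical 2x3 weight matrix: 3 inputs feeding 2 nodes.
W = np.array([[1, -1, 0],
              [0, 2, -1]])
x = np.array([2, 1, 3])

z = W @ x     # zero bias, so the pre-activation is just W times x
a = relu(z)   # activation of a hidden (non-output) layer

print(z)  # [ 1 -1]
print(a)  # [1 0]
```

The second node computes 0·2 + 2·1 − 1·3 = −1, which ReLU clips to 0. With a non-zero bias, each layer would also add a bias vector to `z`; that is the term the simplification removes.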

Walking Through the Code:

To translate the theoretical exploration into practical understanding, I use an interactive Python script that models a seven-layer MLP in NumPy and breaks down each weight group and layer into separate calculations.

Initial Setup:

Let’s start by defining the network’s architecture, laying out the nodes in each layer, their connections, and the initial inputs. This foundation is crucial for understanding the subsequent computational steps.
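As a rough sketch of what this setup can look like in NumPy: the layer sizes, the random integer weights, and the input vector below are all assumptions chosen for illustration, and I am counting "seven layers" as seven weight matrices after the input.

```python
import numpy as np

# Hypothetical sizes: 3 input features followed by seven layers.
layer_sizes = [3, 4, 3, 3, 2, 3, 2, 2]

rng = np.random.default_rng(0)  # fixed seed so the run is repeatable

# One weight matrix per layer, shaped (nodes_out, nodes_in).
# Small integer weights in {-1, 0, 1} keep the arithmetic easy to check by hand.
weights = [
    rng.integers(-1, 2, size=(n_out, n_in))
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]

x = np.array([1, 2, 3])  # illustrative input vector

for i, W in enumerate(weights, start=1):
    print(f"Layer {i}: W shape {W.shape}")
```

Storing each matrix as `(nodes_out, nodes_in)` means a layer is just `W @ a`, and the shapes printed at the end are a quick check that consecutive layers line up.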

Forward Pass:

- Layer-by-Layer Transformation: Examine how inputs are processed through each layer, with specific attention to the application of weights and the ReLU activation function. This step-by-step approach demystifies the network’s operation, showcasing the transformation of inputs into outputs.

- Computational Insights: Special focus is given to the handling of negative values by ReLU and the propagation of data through the network, culminating in the calculation of the output layer.
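The forward pass described above can be sketched as follows. The `forward` helper and the seven 2×2 weight matrices are hypothetical, picked so every step can be verified by hand; they are not the values from the original exercise.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0)

def forward(x, weights):
    """Return the activations of every layer, starting with the input."""
    activations = [x]
    for i, W in enumerate(weights):
        z = W @ activations[-1]               # zero bias: z = W a
        is_output = i == len(weights) - 1
        # ReLU on hidden layers only; the output layer stays linear.
        activations.append(z if is_output else relu(z))
    return activations

# Seven illustrative weight matrices, one per layer.
weights = [np.array(w) for w in [
    [[1, -1], [1, 1]],
    [[1, 1], [-1, 1]],
    [[1, -1], [0, 1]],
    [[2, 1], [1, -1]],
    [[1, 0], [1, 1]],
    [[1, -1], [0, 1]],
    [[1, -1], [1, 1]],
]]
x = np.array([1, 2])

acts = forward(x, weights)
for i, a in enumerate(acts[1:], start=1):
    print(f"layer {i}: {a}")
```

In layer 1 the pre-activation is [-1, 3], and ReLU turns it into [0, 3]. The final layer produces [-3, 3]: because no ReLU is applied at the output, the negative value survives.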

Interactive Code Environment

Using this interactive environment, you can tweak the inputs and weights and see real-time changes in the network’s output. This hands-on component emphasizes learning by doing and helps solidify the concepts behind an MLP.

Original Inspiration

The inspiration for this deep dive came from a series of hands-on exercises shared on LinkedIn by Dr. Tom Yeh. He designed them to break down the complexities of deep learning into manageable, understandable parts. This one in particular, focusing on a seven-layer MLP, highlights the power of interactive learning for neural networks. By engaging directly with the components of a neural network, you start to see beyond the ‘black box’ and grasp the nuanced mechanisms at play.

Conclusion

The journey through Multi-Layer Perceptrons (MLPs) in the Deep Learning Foundations Series has been an enlightening exploration, bridging the gap between abstract neural network concepts and tangible, impactful applications in our world. MLPs, with their intricate layering and computational depth, play a pivotal role in powering technologies that touch nearly every aspect of our lives—from enhancing the way we communicate through natural language processing to driving innovations in autonomous vehicles.

A key to unlocking the potential of MLPs lies in understanding forward propagation, the fundamental process that underpins how these networks learn and make predictions. Forward propagation is the heartbeat of a neural network: it passes information through the network’s layers, transforming input data into actionable insights. This sequential journey from input to output is what enables MLPs to perform tasks with astonishing accuracy and efficiency.

The applications of MLPs, empowered by forward propagation, are vast! In healthcare, they underpin systems that can predict patient outcomes, personalize treatments, and even identify diseases from medical imagery with greater accuracy than ever before. In the realm of finance, MLPs contribute to fraud detection algorithms that protect consumers, and in environmental science, they model complex climate patterns, helping researchers predict changes and mitigate risks.

Understanding forward propagation not only helps us understand how MLPs function but also reveals the intricate dance of mathematics and data that fuels modern AI innovations. It’s this foundational process that allows us to translate theoretical knowledge into applications that can forecast weather patterns, translate languages in real-time, and even explore the potential for personalized education platforms that adapt to individual learning styles.

- Last Post: Batch Processing Three Inputs

- Next Post: Backpropagation and Gradient Descent

LinkedIn Post: Coding by Hand: What are Multi-Layer Perceptrons?

About Jeremy London

I'm based in Denver and fill my days with creativity and curiosity. When I'm not snowboarding fresh powder, I'm in the kitchen chasing the perfect recipe or playing my guitar. I love to tinker, experimenting with flavors, sounds, and ideas, always looking for ways to turn the ordinary into something a little more extraordinary.